Of all the features Apple announced at its Worldwide Developers Conference (WWDC) last week, a new addition to Apple’s Visual Lookup system, which lets you “pick up” an object from a photo or a video with just a press of your finger, was the most fun.

While Apple introduced a number of interesting new features to its software during the event, those didn’t quite deliver the surprise and joy that come from using the deceptively simple new photo cutout feature, an enhancement to Apple’s existing Visual Lookup system. Launched last year with iOS 15, Visual Lookup today recognizes pets, plants, landmarks, and other objects in your photos. But now, when you touch and hold on the subject of the image, you can actually lift it away from the background to use in other apps.

Essentially, the feature separates the photo’s background from the subject, then turns the subject into a separate image. This image can then be saved or dragged and dropped into a messaging app like iMessage or WhatsApp.
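
To make the idea concrete, here is a minimal sketch of the final step only: turning a subject mask into a standalone cutout with a transparent background. This is not Apple’s implementation. It assumes some segmentation model has already produced a per-pixel mask (1 for subject, 0 for background), and the function name `lift_subject` and the tiny sample image are hypothetical, chosen purely for illustration.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def lift_subject(pixels: List[List[RGB]], mask: List[List[int]]) -> List[List[RGBA]]:
    """Return the masked subject as an RGBA image cropped to its bounding box.

    `mask` stands in for a segmentation result (assumption): 1 = subject, 0 = background.
    """
    if len(pixels) != len(mask) or any(len(p) != len(m) for p, m in zip(pixels, mask)):
        raise ValueError("image and mask must have the same dimensions")

    # Find the rows and columns that contain at least one subject pixel.
    rows = [y for y, row in enumerate(mask) if any(row)]
    width = len(mask[0]) if mask else 0
    cols = [x for x in range(width) if any(row[x] for row in mask)]
    if not rows or not cols:
        return []  # no subject found

    top, bottom = rows[0], rows[-1]
    left, right = cols[0], cols[-1]

    cutout = []
    for y in range(top, bottom + 1):
        out_row = []
        for x in range(left, right + 1):
            if mask[y][x]:
                r, g, b = pixels[y][x]
                out_row.append((r, g, b, 255))  # subject pixel: fully opaque
            else:
                out_row.append((0, 0, 0, 0))    # background pixel: fully transparent
        cutout.append(out_row)
    return cutout


# Hypothetical 4x3 "photo": a brown subject on a green background.
GREEN, BROWN = (0, 128, 0), (139, 69, 19)
photo = [
    [GREEN, GREEN, GREEN, GREEN],
    [GREEN, BROWN, BROWN, GREEN],
    [GREEN, GREEN, BROWN, GREEN],
]
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 1, 0],
]
sticker = lift_subject(photo, mask)
```

The resulting `sticker` is a 2x2 image in which only the subject’s pixels are opaque, which is what lets a cutout be dropped into another app without dragging its background along.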

Apple’s new visual lookup feature

During the keynote, Apple described the addition as the product of an advanced machine learning model, which is accelerated by Core ML and the Neural Engine to perform 40 billion operations in milliseconds.

In our early tests on the iOS 16 developer beta, the feature worked surprisingly well with animals, humans, and objects. We’ll likely see it used to create memes and countless animal stickers when it goes live.

Object separation has long been a sought-after feature in photo editing apps. Previously, you had to use apps like Photoshop or Pixelmator to meticulously draw a boundary around an object before moving it into another image. Tools like remove.bg and Canva’s background remover have made the process easier, but it’s amazing to have this feature built into the iPhone’s operating system. Apple said the feature “feels like magic,” and in this case, the hyperbole is not entirely off base. This feature surprises.

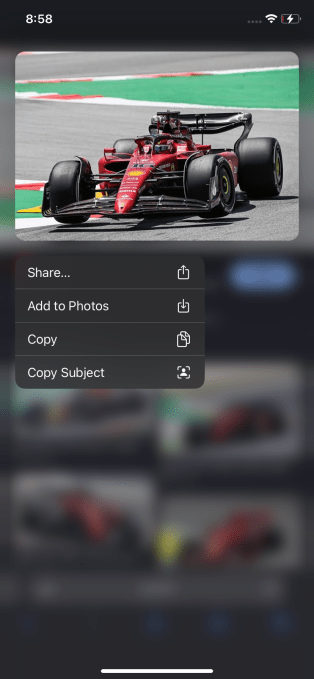

It’s also not limited to photos, as it turns out. Apple leveraged the new functionality to enhance other apps, too. For instance, if you long-press on an image in Safari, you’ll see an option called “Copy Subject,” which does the same thing as Visual Lookup: it removes the background.

Apple’s Visual Lookup feature… but with a new name

A post on Reddit suggested that this feature is present in the Files app, too. When you select an image and then go to options, you’ll find it available as “Remove Background.”

And on the Lock Screen, Apple’s ability to understand which part of the photo is the subject allows it to create a layered look where part of the photo can overlay on top of various Lock Screen elements, like the date and time.

This feature isn’t meant to replace more advanced image editing tools, of course. While you may use it to create a lot of collages, memes, and posters without having to put in too much effort, you’ll still need image editing software like Pixelmator or Photoshop on the desktop or something like Snapseed if you want to superimpose the separated object onto another picture, for example.

Apple’s not the only company showing off its A.I. chops in object separation. Last year, Google introduced the Magic Eraser feature with the Pixel 6, which allows you to remove unwanted objects from a photo while keeping other parts of the image intact.


Both of these features have their own merits, and both are extremely useful in day-to-day photo editing. It would be great if Google and Apple decided to copy each other and include both features in their respective mobile operating systems.

The new Visual Lookup feature is just one of many features Apple introduced in the updated iOS 16 Photos app. As TechCrunch noted last week, new additions like password protection for hidden photos and the ability to copy and paste styles will likely entice people to use the iPhone’s built-in editing tools more.

The new feature was one of several notable improvements announced during WWDC. One of the most debated is Stage Manager, a new addition focused on improved multitasking for iPadOS and macOS, which represents a sizable change to the user interface. Meanwhile, the Live Activities feature can give you real-time updates from apps, such as a sports match that you’re interested in or the location of an Uber ride or pizza delivery. But this is more of a practical update, not necessarily a “fun” one.