Technical interviews are a black box: candidates are usually told whether they made it to the next round, but they rarely find out why.

Lack of feedback isn’t just frustrating for candidates; it’s also bad for business. Our research shows that 43% of all candidates consistently underrate their technical interview performance, and 25% of all candidates consistently think they failed when they actually passed.

Why do these numbers matter? Because giving instant feedback to successful candidates can do wonders for increasing your close rate.

Giving feedback not only makes the candidates you want today more likely to join your team; it’s also crucial to hiring the people you might want down the road. Technical interview outcomes are erratic, and according to our data, only about 25% of candidates perform consistently from interview to interview.

This means a candidate you reject today might be someone you want to hire in six months.

But won’t we get sued?

I surveyed founders, hiring managers, recruiters and labor lawyers to understand why anyone who’s ever gone through interviewer training has been told in no uncertain terms not to give feedback.

The main reason: Companies are scared of being sued.

As it turns out, literally zero companies (at least in the U.S.) have ever been sued by an engineer who received constructive post-interview feedback.

People don’t get defensive because they failed — it’s because they don’t understand why and feel powerless.

A lot of cases are settled out of court, which makes that data much harder to get, but given what we know, the odds of being sued after giving useful feedback are extremely low.

What about candidates getting defensive?

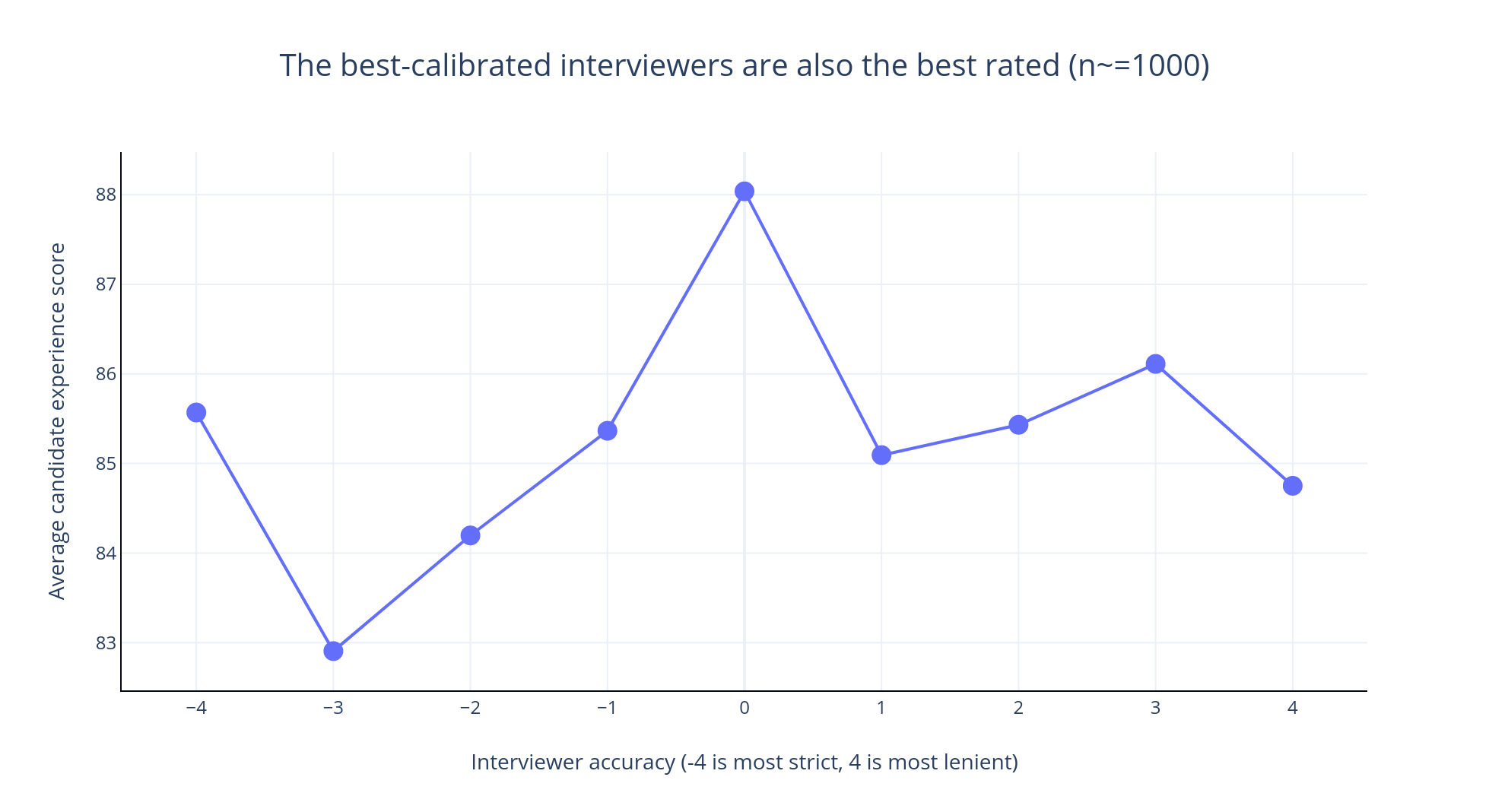

For every interviewer on our platform, we track two key metrics: candidate experience and interviewer calibration.

The candidate experience score is a measure of how likely someone is to return after talking to a given interviewer. The interviewer calibration score tells us whether a given interviewer is too strict or too lenient, based on how well their candidates do in subsequent, real interviews. If someone continually gives good scores to candidates who fail real interviews, they’re too lenient, and vice versa.
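
To make the calibration idea concrete, here is a minimal sketch in Python. It is not the platform's actual formula, which the article doesn't spell out. The `Outcome` record, the `calibration` function and the scoring rule (share of over-generous verdicts minus share of over-harsh ones) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """One candidate's results. Hypothetical record, not a real schema."""
    interviewer_passed: bool  # the interviewer's verdict on our platform
    real_passed: bool         # the candidate's result in a later real interview


def calibration(outcomes: list[Outcome]) -> float:
    """Illustrative calibration score in the range [-1, 1].

    Positive means too lenient (passed people who later failed),
    negative means too strict (failed people who later passed),
    and a value near zero means roughly "just right".
    """
    if not outcomes:
        raise ValueError("need at least one outcome")
    too_lenient = sum(o.interviewer_passed and not o.real_passed for o in outcomes)
    too_strict = sum(not o.interviewer_passed and o.real_passed for o in outcomes)
    return (too_lenient - too_strict) / len(outcomes)


# Made-up data: two of four candidates got a pass they couldn't repeat.
lenient = [
    Outcome(True, False),
    Outcome(True, False),
    Outcome(True, True),
    Outcome(False, False),
]
print(calibration(lenient))  # 0.5, i.e. leaning lenient
```

Under this rule, an interviewer whose verdicts always match later real-world results scores 0, which corresponds to the "neither too strict nor too lenient" case.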

When you put these scores together, you can reason about the value of delivering honest feedback. Below is a graph of the average candidate experience score as a function of interviewer accuracy, representing data from over 1,000 distinct interviewers (comprising about 100,000 interviews):

Image Credits: Aline Lerner

The candidate experience score peaks right at the point where interviewers are neither too strict nor too lenient, but are, in Goldilocks terms, “just right.” It drops off pretty dramatically on either side after that.

To improve close rates for technical interviews, give applicants feedback (good or bad) by Ram Iyer originally published on TechCrunch