Google Cloud today announced a slew of new AI-powered features for its productivity tools, but the company also launched a set of new APIs and tools for developers that are just as interesting, if not more so. In addition to making its large language models available to developers through an API, Google launched MakerSuite, a new browser-based tool that will make it easier for developers to build AI-powered applications on top of Google’s foundation models.

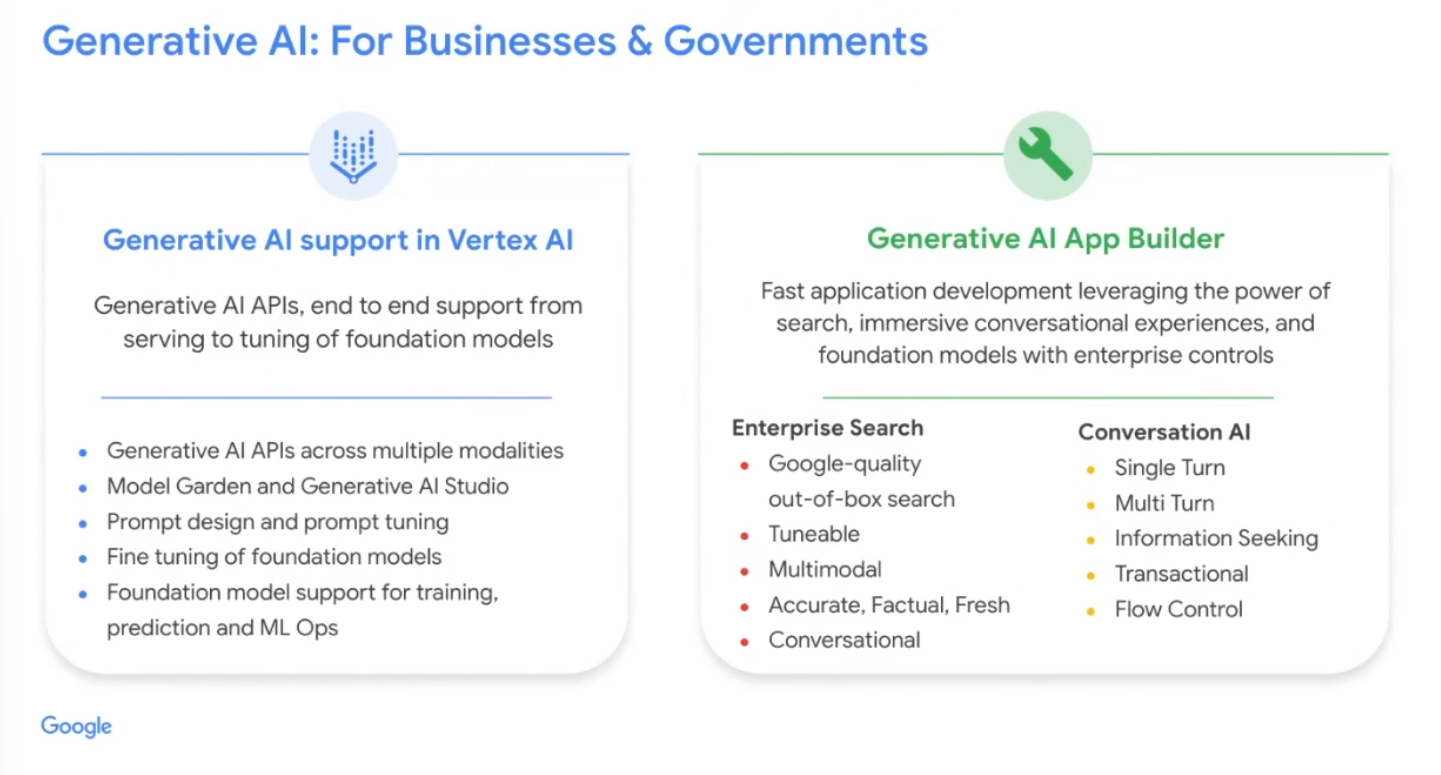

Google is also bringing support for generative AI to Vertex AI, its platform for building and deploying ML models, and launching its Generative AI App Builder, a new service that will help developers ship bots, chat interfaces, digital assistants and custom search engines.

“This is the first time we’re taking on new generative AI models and making them directly accessible through an API to the developer community,” said Google’s Thomas Kurian.

As with its new AI tools in Workspace, these new features will first roll out to a limited set of developers and will only be available in Google’s North America data centers. Some customers already using these tools include Toyota, Mayo Clinic and Deutsche Bank.

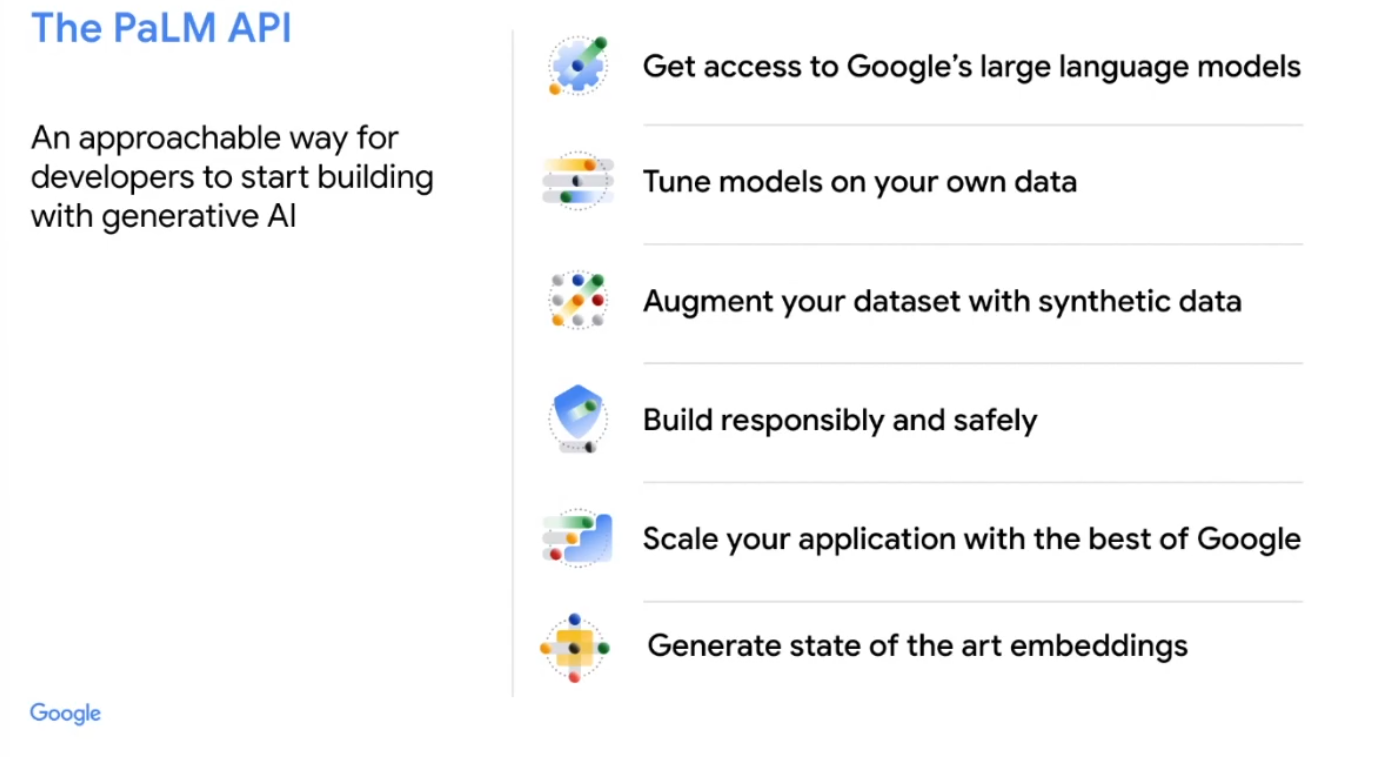

There’s a lot to unpack here, so let’s start at the beginning. The PaLM API is at the core of today’s announcements. While Google has long worked on the PaLM model, the company describes the PaLM API as Google’s gateway to access its large language models in general. “This is the first time we’re taking on new generative AI models and making them directly accessible through an API to the developer community,” Google Cloud CEO Thomas Kurian explained.

Kurian described the new API as an “extremely approachable way for developers to start building with generative AI.” He noted that the new API will give developers access to these foundation models, and will also allow them to tune and augment the models with their own data and to supplement their datasets with synthetic data “to build applications responsibly and safely, to scale the applications for serving or inferencing, using Google’s infrastructure, and to generate state of the art embeddings.”

Google says that starting today, it will make an “efficient model available in terms of size and capabilities” and that it will add other models and sizes soon. Why Google is not using a more generic name than ‘PaLM’ for this API is anyone’s guess, but the company is indeed making the PaLM model available through this API for multi-turn conversations and for single-turn general purpose use cases like text summarization and classification. A company spokesperson told me that Google chose PaLM for this first release “as it works particularly well for chat and text use cases.” Likely candidates for additional models are LaMDA and MUM.

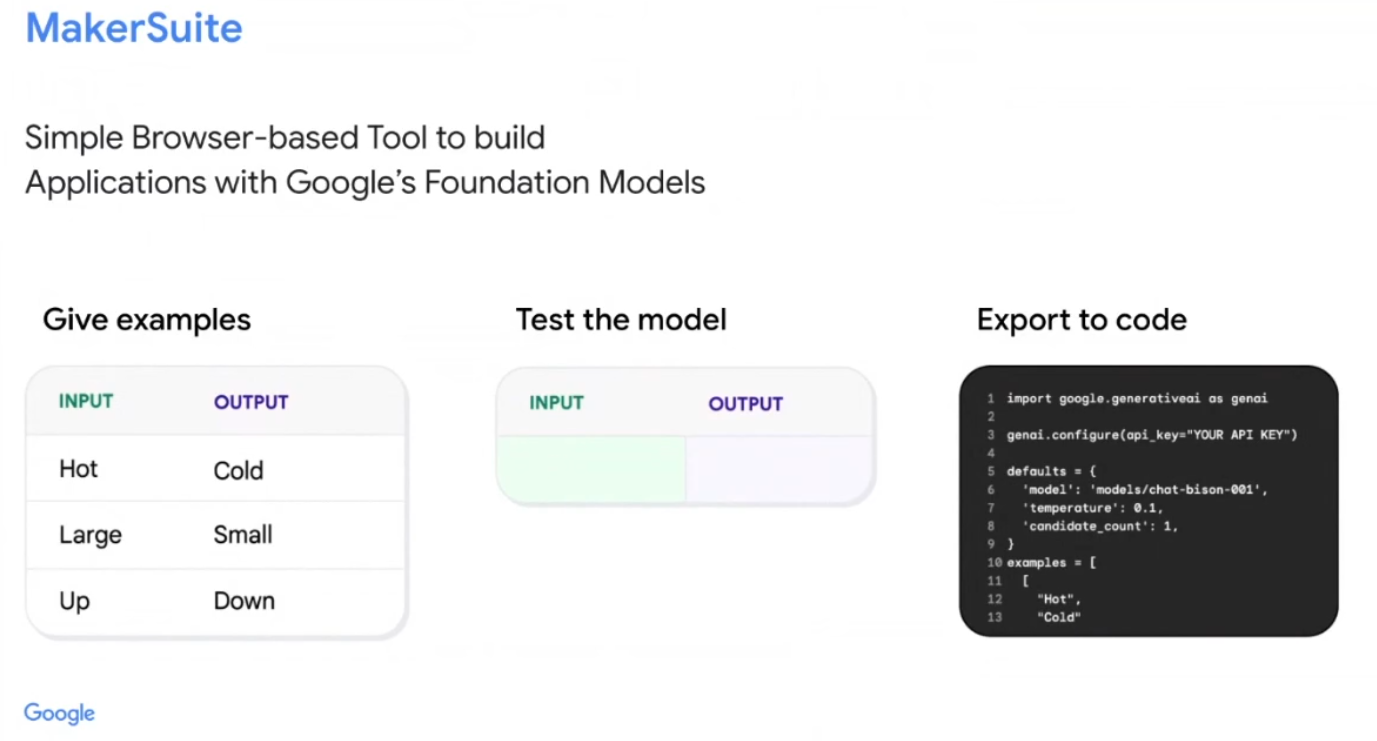

For developers who don’t want to delve into the API, Google is launching the low-code MakerSuite service. This service, too, will only be available to Trusted Testers and will make two models available to these developers: PaLM chat-bison-001 and PaLM text-bison-001. PaLM chat will be the tool for building chat-style, multi-turn applications while PaLM text is meant for single-turn input/output scenarios.
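To make the single-turn versus multi-turn distinction concrete, here is a minimal Python sketch. It does not call Google's API: the `TextRequest` and `ChatSession` classes and their fields are hypothetical shapes invented for illustration, and the sketch assumes a stateless service where the caller carries the conversation history. Only the two model names come from the announcement.

```python
from dataclasses import dataclass, field


@dataclass
class TextRequest:
    """Single-turn: one prompt in, one completion out (hypothetical shape)."""
    model: str
    prompt: str


@dataclass
class ChatSession:
    """Multi-turn: earlier messages travel with each new one (hypothetical shape)."""
    model: str
    messages: list = field(default_factory=list)

    def add_turn(self, author: str, content: str) -> None:
        # Append one message; the whole list would be sent on the next call.
        self.messages.append({"author": author, "content": content})


# A summarization or classification job fits in a single request.
text_request = TextRequest(
    model="text-bison-001",
    prompt="Classify this review as positive or negative: 'Great battery life.'",
)

# A chat application accumulates context across turns.
chat = ChatSession(model="chat-bison-001")
chat.add_turn("user", "What is Vertex AI?")
chat.add_turn("model", "It is Google's platform for building and deploying ML models.")
chat.add_turn("user", "Does it support text generation?")
```

The third user message only makes sense because the first two turns are still in `chat.messages`, which is the practical difference between the two models' intended use cases.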

The idea here is to let developers give a number of examples to the tool to teach it what kinds of results they are looking for — and then test these and make them available as code, but Google provided very few details about how exactly this service will work in practice.
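Google has not said how MakerSuite turns examples into code, so the following is only one plausible reading of that workflow: a few-shot prompt, where input/output pairs are placed ahead of the new input so the model can infer the pattern. The helper name `build_few_shot_prompt` and the `input:`/`output:` labels are assumptions made for this sketch, not anything Google has documented.

```python
def build_few_shot_prompt(examples, new_input):
    """Format (input, output) example pairs followed by a new, unanswered input."""
    lines = []
    for source, target in examples:
        lines.append(f"input: {source}")
        lines.append(f"output: {target}")
    # Leave the final output blank for the model to complete.
    lines.append(f"input: {new_input}")
    lines.append("output:")
    return "\n".join(lines)


examples = [
    ("The checkout page keeps crashing.", "bug report"),
    ("Could you add a dark mode?", "feature request"),
]
prompt = build_few_shot_prompt(examples, "I was charged twice this month.")
print(prompt)
```

The resulting string would then be sent as the prompt of a single-turn text request; the testing and export steps that Google describes are not modeled here.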

The company did spend a lot more time talking about Vertex AI and the Generative AI App Builder, though. For Vertex AI, Google has always taken a platform approach, offering an end-to-end service for developers to build their AI models and applications. Now this includes access to foundation models for generating text and images. Over time, Kurian said, audio and video will be added as well. “The idea here is to let developers give a number of examples to the model and then quickly test these and make them available as code,” the company explained.

The Generative AI App Builder is an entirely new service. It will allow developers to build AI-powered chat interfaces and digital assistants based on their own data. “Generative AI Application Builder is a fast application development environment designed to allow business users – not necessarily just developers – but to allow business users to work in concert with developers to leverage the power of search, conversation experiences and foundation models, while respecting enterprise controls,” Kurian explained.

To do this, Google combined its foundation models with its enterprise search capabilities and its conversation AI for building single- and multi-turn conversations. Kurian noted that this could be used to retrieve information, but also, with the right hooks into a company’s APIs, to transact. He stressed that users will get control over the generative flow here. They can opt to give the large language model control of this flow or use a more deterministic flow (maybe in a customer service scenario), where there is no risk of the model going off piste.

Throughout its announcements, Google stressed that a company’s training data will always be kept private and not used to train the broader model. The focus here is also clearly on business users who want to augment the model with their own data and/or tune it for their use cases.

The one thing we didn’t see today was the public release of LaMDA, Google’s best-known model. It’s interesting that Google went with the PaLM model as the foundation for these services. It first announced PaLM a year ago. At the time, the company noted that the Google Research team responsible for the model was looking to build a model that could “generalize across domains and tasks while being highly efficient.” With its 540 billion parameters, it’s a significantly larger model than OpenAI’s GPT-3 with its 175 billion parameters.

What this means in practice, especially with GPT-3.5 now in the market and GPT-4 likely launching soon, remains to be seen, though a year ago, Google said PaLM typically outperformed GPT-3 on math questions, something large language models aren’t necessarily best at. And while that’s not the focus of the way Google is using PaLM in these current products, back then, the company also said that PaLM has shown “strong performance across coding tasks and natural language tasks in a single model, even though it has only 5% code in the pre-training dataset.”

Google Cloud gives developers access to its foundation models by Frederic Lardinois originally published on TechCrunch