Arthur, a machine learning monitoring startup, has benefited from the interest in generative AI this year, and it has been developing tools to help companies work with LLMs more effectively. Today it is releasing Arthur Bench, an open source tool to help users find the best LLM for a particular set of data.

Adam Wenchel, CEO and co-founder at Arthur, says that the company has seen a lot of interest in generative AI and LLMs, and so it has been putting a lot of effort into building products for that market.

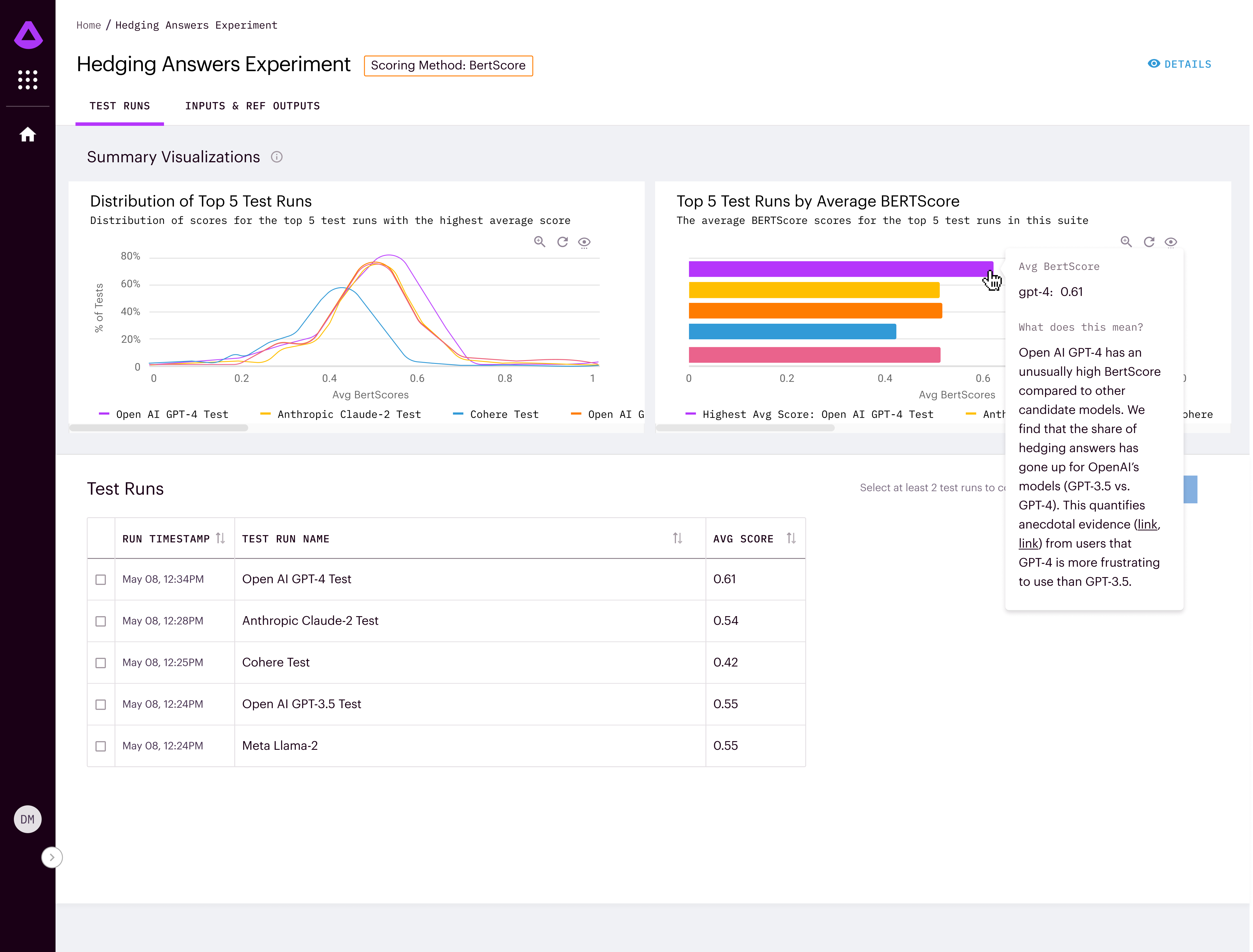

He says that today (granted, it has been less than a year since the release of ChatGPT), companies don't have an organized way to measure the effectiveness of one tool against another, and that's why Arthur created Arthur Bench.

“Arthur Bench solves one of the critical problems that we just hear with every customer, which is [with all of the model choices], which one is best for your particular application,” Wenchel told TechCrunch.

It comes with a suite of tools you can use to methodically test model performance, but the real value is that it lets you measure how the types of prompts your users would send in your particular application perform across different LLMs.

Image Credits: Arthur

“You could potentially test 100 different prompts, and then see how two different LLMs – like how Anthropic compares to OpenAI – [perform] on the kinds of prompts that your users are likely to use,” Wenchel said. What's more, he says that you can do that at scale and make a better decision about which model is best for your particular use case.

Arthur Bench is being released today as an open source tool. There will also be a SaaS version for customers who don't want to deal with the complexity of managing the open source version, or who have larger testing requirements and are willing to pay for that. But for now, Wenchel said, the company is concentrating on the open source project.

The new tool comes on the heels of May's release of Arthur Shield, a kind of LLM firewall designed to detect hallucinations in models while protecting against toxic information and private data leaks.