Achieving autonomous driving safely requires near-endless hours of training software on every situation that could possibly arise before putting a vehicle on the road. Historically, autonomy companies have collected troves of real-world data with which to train their algorithms, but it’s impossible to train a system to handle edge cases based on real-world data alone. Not only that, but it’s time-consuming to collect, sort and label all that data in the first place.

Most self-driving vehicle companies, like Cruise, Waymo and Waabi, use synthetic data to train and test perception models with a speed and level of control that’s impossible with data collected from the real world. Parallel Domain, a startup that has built a data generation platform for autonomy companies, says synthetic data is a critical component of scaling the AI that powers vision and perception systems and of preparing those systems for the unpredictability of the physical world.

The startup just closed a $30 million Series B led by March Capital, with participation from returning investors Costanoa Ventures, Foundry Group, Calibrate Ventures and Ubiquity Ventures. Parallel Domain has been focused on the automotive market, supplying synthetic data to some of the major OEMs that are building advanced driver assistance systems, as well as to autonomous driving companies building much more advanced self-driving systems. Now, Parallel Domain is ready to expand into drones and mobile computer vision, according to co-founder and CEO Kevin McNamara.

“We’re also really doubling down on generative AI approaches for content generation,” McNamara told TechCrunch. “How can we use some of the advancements in generative AI to bring a much broader diversity of things and people and behaviors into our worlds? Because again, the hard part here is really, once you have a physically accurate renderer, how do you actually go build the million different scenarios a car is going to need to encounter?”

The startup also wants to hire a team to support its growing customer base across North America, Europe and Asia, according to McNamara.

Virtual world building

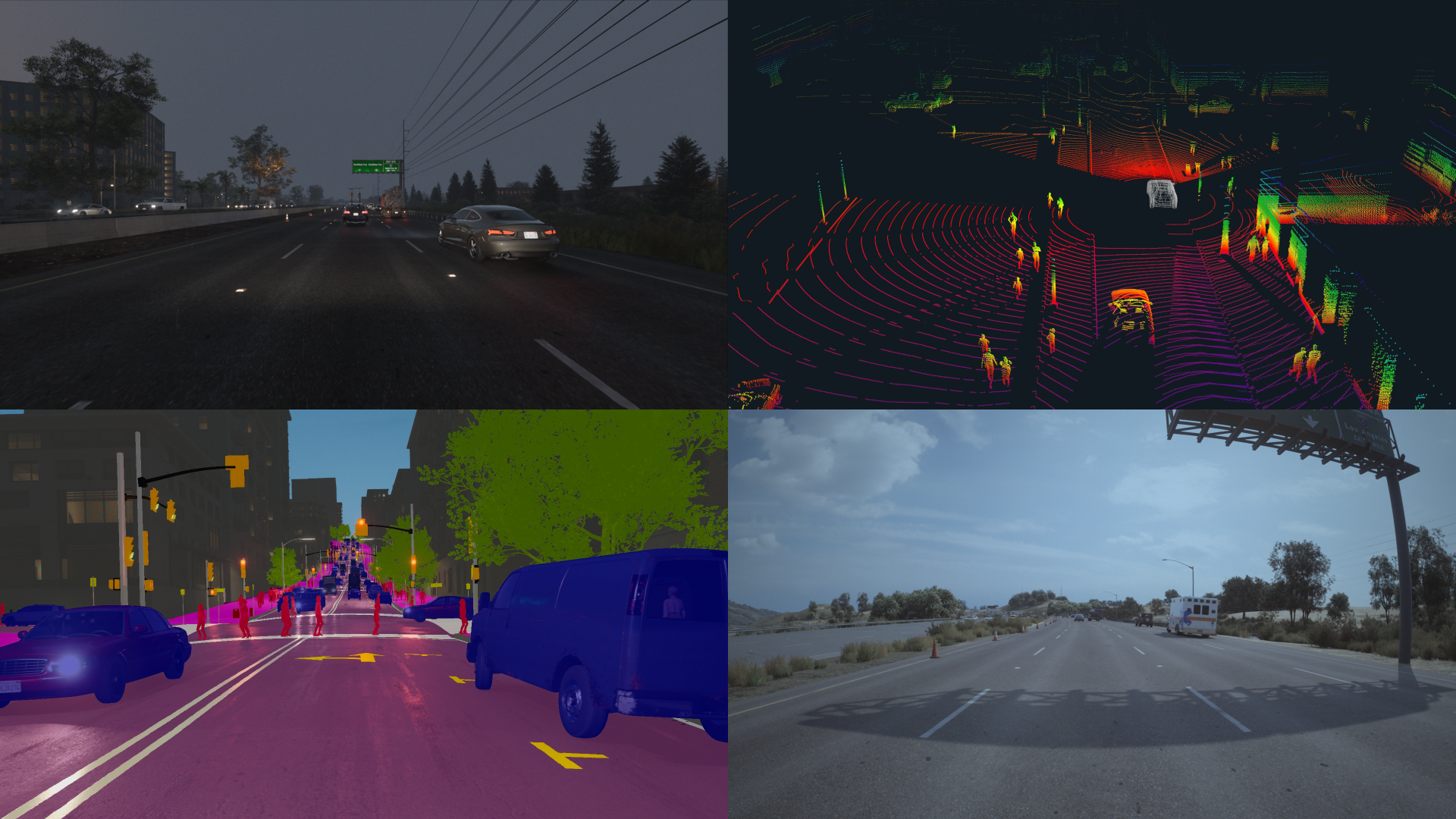

A sample of Parallel Domain’s synthetic data. Image Credit: Parallel Domain

When Parallel Domain was founded in 2017, the startup was hyperfocused on creating virtual worlds based on real-world map data. Over the past five years, Parallel Domain has added to its world generation by filling it with cars, people, different times of day, weather and the full range of behaviors that make those worlds interesting. This enables customers (among which Parallel Domain counts Google, Continental, Woven Planet and Toyota Research Institute) to generate the dynamic camera, radar and lidar data they need to train and test their vision and perception systems, said McNamara.

Parallel Domain’s synthetic data platform consists of two modes: training and testing. When training, customers describe the high-level parameters on which they want to train their model (for example, highway driving with 50% rain, 20% at night and an ambulance in every sequence), and the system generates hundreds of thousands of examples to meet those parameters.
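To make the idea of high-level parameters concrete, here is a minimal Python sketch of how such a request could be expanded into per-sequence specifications. It is only an illustration under stated assumptions: the names `SequenceSpec`, `quota_flags` and `build_specs` are hypothetical and are not Parallel Domain’s API, and the sketch assumes the rain and night percentages are treated as exact, independent quotas.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SequenceSpec:
    """Hypothetical description of one sequence to be rendered."""
    scene: str
    raining: bool
    night: bool
    required_actors: tuple


def quota_flags(n, fraction, rng):
    """Return n booleans with round(fraction * n) set to True, in shuffled order."""
    k = round(fraction * n)
    values = [True] * k + [False] * (n - k)
    rng.shuffle(values)
    return values


def build_specs(n, scene="highway", rain_fraction=0.5, night_fraction=0.2,
                required_actors=("ambulance",), seed=0):
    """Expand high-level parameters into n per-sequence specs (illustrative only)."""
    rng = random.Random(seed)
    rain = quota_flags(n, rain_fraction, rng)
    night = quota_flags(n, night_fraction, rng)
    return [
        SequenceSpec(scene, is_rain, is_night, tuple(required_actors))
        for is_rain, is_night in zip(rain, night)
    ]


# Example from the article: highway driving, 50% rain, 20% night,
# and an ambulance in every sequence.
specs = build_specs(1000)
```

Using fixed quotas rather than independent coin flips keeps the requested proportions exact for any batch size, which is one plausible reading of "meet those parameters"; a real generator may well sample differently.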

On the testing side, Parallel Domain offers an API that allows the customer to control the placement of dynamic things in the world, which can then be hooked up to their simulator to test specific scenarios.

Waymo, for example, is particularly keen on using synthetic data to test for different weather conditions, the company told TechCrunch. (Disclaimer: Waymo is not a confirmed Parallel Domain customer.) Waymo sees weather as a new lens it can apply to all the miles it has driven in the real world and in simulation, since it would be impossible to re-collect all those experiences under arbitrary weather conditions.

Whether it’s testing or training, whenever Parallel Domain’s software creates a simulation, it is able to automatically generate labels to correspond with each simulated agent. This helps machine learning teams do supervised learning and testing without having to go through the arduous process of labeling data themselves.
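The reason labels can be generated automatically is that a simulator already knows the ground truth for every agent it places. The Python sketch below illustrates that idea only; `SimAgent` and `label_for` are hypothetical names, and the 2D bounding-box format is an assumption rather than Parallel Domain’s actual label schema.

```python
from dataclasses import dataclass


@dataclass
class SimAgent:
    """Hypothetical simulated agent with a known 2D box in image coordinates."""
    agent_id: int
    category: str
    cx: float      # box center, x (pixels)
    cy: float      # box center, y (pixels)
    width: float   # box width (pixels)
    height: float  # box height (pixels)


def label_for(agent):
    """Derive a label record directly from the simulator's own agent state."""
    half_w = agent.width / 2
    half_h = agent.height / 2
    return {
        "agent_id": agent.agent_id,
        "category": agent.category,
        # (x_min, y_min, x_max, y_max)
        "bbox": (agent.cx - half_w, agent.cy - half_h,
                 agent.cx + half_w, agent.cy + half_h),
    }


agents = [
    SimAgent(1, "ambulance", 100.0, 50.0, 40.0, 20.0),
    SimAgent(2, "pedestrian", 10.0, 30.0, 4.0, 12.0),
]
labels = [label_for(a) for a in agents]
```

No human annotation step appears anywhere in the sketch: each label is a by-product of the state the simulation already holds.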

Parallel Domain envisions a world in which autonomy companies use synthetic data for most, if not all, of their training and testing needs. Today, the ratio of synthetic to real-world data varies from company to company. More established businesses that have historically had the resources to collect lots of data use synthetic data for about 20% to 40% of their needs, whereas companies earlier in their product development process rely on roughly 80% synthetic data and 20% real-world data, according to McNamara.

Julia Klein, partner at March Capital and now one of Parallel Domain’s board members, said she thinks synthetic data will play a critical role in the future of machine learning.

“Obtaining the real world data that you need to train computer vision models is oftentimes an obstacle and there’s hold ups in terms of being able to get that data in, to label that data, to get it ready to a position where it can actually be used,” Klein told TechCrunch. “What we’ve seen with Parallel Domain is that they’re expediting that process considerably, and they’re also addressing things that you may not even get in real world datasets.”

Parallel Domain says autonomous driving won’t scale without synthetic data by Rebecca Bellan originally published on TechCrunch